CSAT vs. NPS vs. CES: How to Calculate Plus the Pros and Cons of Each

For customer experience (CX), call center, and IT help desk leaders, measuring customer satisfaction is about gaining insights that help you make better decisions. Choosing the right customer experience metric matters. It can mean the difference between fixing problems as they happen and preventing them before they occur.

Three main metrics dominate the customer experience world:

- Customer Satisfaction Score (CSAT)

- Net Promoter Score (NPS)

- Customer Effort Score (CES)

Each one gives you a different view of customer sentiment. Yet many CX organizations struggle to determine which metric best suits their needs. The wrong choice results in wasted resources, misleading insights, and missed chances to improve customer service delivery.

This guide compares and contrasts CSAT, NPS, and CES. When you understand the strengths, limits, and best use cases for each approach, you can build a measurement system that genuinely reflects your customers' experience.

The payoff is improvement that shows up across the board, which makes the effort worth the investment. Let's dive into CSAT vs NPS vs CES.

What is CSAT?

Customer Satisfaction Score (CSAT) asks a simple question: "How satisfied were you with your experience?" It is probably the most straightforward way to measure customer sentiment.

That simplicity makes it one of the most popular customer experience metrics in every sector, especially among customer service and CX teams, IT teams, and outsourced providers.

Purpose of CSAT

CSAT works as a transactional metric. It captures immediate customer reactions to specific interactions or touchpoints. For CX leaders, this might mean measuring satisfaction after resolving a problem for a customer or upgrading them to a new service.

In IT, it's worth asking this after a support ticket is resolved, or after a software deployment. This question's primary purpose is to give you real-time feedback on an individual experience, a specific interaction between a customer and the person who helped them. It doesn't evaluate the overall relationship between customers and your organization.

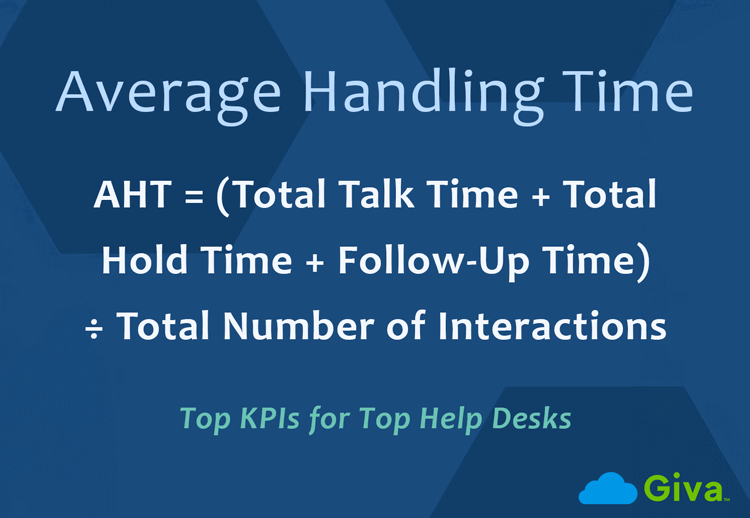

How CSAT is Calculated

CSAT typically uses a rating scale. The most common is 1 to 5, where 1 means "very unsatisfied" and 5 means "very satisfied." Some organizations use a 1-10 scale instead, and others use a simple satisfied/unsatisfied choice.

CSAT Formula

(Number of satisfied customers / Total responses) x 100

Usually, satisfied customers are those who rate 4 or 5 on a 5-point scale.

Here's an example:

- You receive 80 responses to a post-ticket survey.

- 64 customers rate their experience as 4 or 5.

- Your CSAT score would be 80%.
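
The CSAT formula is easy to script against exported survey responses. The sketch below is a minimal Python illustration, assuming a 1-5 scale with 4 and 5 counted as satisfied. The function name `csat_score` and the split of the 16 unsatisfied ratings between 2s and 3s are hypothetical, chosen only to reproduce the 64-of-80 example.

```python
from collections.abc import Iterable


def csat_score(ratings: Iterable[int], satisfied_threshold: int = 4) -> float:
    """Return CSAT as a percentage: share of ratings at or above the threshold.

    Assumes a 1-5 scale where 4 and 5 count as "satisfied"; pass a different
    threshold for other scales.
    """
    values = list(ratings)
    if not values:
        raise ValueError("CSAT is undefined with zero responses")
    satisfied = sum(1 for r in values if r >= satisfied_threshold)
    # (Number of satisfied customers / Total responses) x 100
    return 100 * satisfied / len(values)


# Worked example: 80 responses, 64 of them rated 4 or 5.
# The 3s and 2s below are hypothetical filler for the 16 other responses.
responses = [5] * 40 + [4] * 24 + [3] * 10 + [2] * 6
print(f"CSAT: {csat_score(responses):.0f}%")  # CSAT: 80%
```

For a binary satisfied/unsatisfied survey, the same function works if answers are coded as 1 (satisfied) and 0 (unsatisfied) with `satisfied_threshold=1`.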

Many CX software platforms can trigger CSAT surveys automatically after closing tickets. This makes data collection seamless and consistent.

CSAT Benchmarks

- Good: 75-85%

- Excellent: 90%+

- Industry average: ~80% across industries

Pros and Cons of CSAT

Next, we need to consider the pros and cons of CSAT.

Pros

- CSAT is simple and clear. The straightforward nature makes it easy for customers to respond. It's also easy for teams to understand. Because the question is simple and appears right after an interaction, CSAT surveys typically get better response rates than longer surveys.

- The transactional nature gives you actionable insights. You can identify specific pain points in particular processes. You can spot issues with individual team members. CSAT can be used at multiple touchpoints throughout the customer journey. This flexibility is valuable.

- The widespread use of CSAT makes benchmarking easier. You can compare your performance against industry standards without much trouble.

Cons

- CSAT has limited predictive value. A satisfied customer today doesn't necessarily mean loyalty or future business. Customers also tend to focus on the most recent part of their experience rather than the interaction as a whole, a tendency known as recency bias.

- Scoring tendencies vary a lot across different cultures and regions, which makes global comparisons complicated. A score alone doesn't explain why customers feel the way they do. You need additional qualitative feedback for context.

- CSAT has a short-term focus. It captures moments rather than measuring the cumulative relationship with your services.

Common CSAT Mistakes and How to Avoid Them

Treating CSAT as a Long-Term Loyalty Indicator

CSAT measures short-term satisfaction, not enduring relationships. Relying on it for loyalty decisions can create false confidence.

Solution: Use CSAT for transactional feedback and pair it with NPS or CES for a broader view of loyalty and effort.

Asking Too Many Questions

Expanding a simple CSAT survey into multiple questions reduces completion rates and distorts results.

Solution: Keep it to one clear question and an optional comment field. The goal is quick, specific insight, not an exhaustive review.

Ignoring Timing and Context

Sending CSAT surveys too late or at irrelevant points produces inaccurate data.

Solution: Trigger CSAT immediately after interactions, such as ticket closures, upgrades, or resolutions, while the experience is still fresh.

Now, let's look at NPS.

What is NPS?

Net Promoter Score (NPS) tries to answer a more strategic question: How likely are customers to actively recommend your services to others?

This takes a different approach: it aims to measure customer loyalty and the likelihood of advocacy. Fred Reichheld developed NPS in 2003, and it has become a standard metric across numerous sectors, especially customer service, IT, and software.

Purpose of NPS

Unlike CSAT's transactional focus, NPS takes a big-picture approach. It aims to gauge the overall relationship between your organization and its customers.

For IT leaders, this metric shows whether your technology services create genuine business value or merely meet minimum expectations. In internal IT contexts, NPS can reveal how business units view IT. Do they see IT as a strategic partner, or as a necessary cost center?

How NPS is Calculated

NPS relies on a single question: "On a scale of 0 to 10, how likely are you to recommend our company/product/service to a friend or colleague?"

Based on their responses, customers fall into three categories:

- Promoters (9-10): These are enthusiastic supporters. They actively advocate for your services.

- Passives (7-8): These customers are satisfied but not enthusiastic. They're vulnerable to competitive offerings.

- Detractors (0-6): These are unhappy customers. They may damage your reputation through negative word of mouth.

NPS Formula

% Promoters - % Detractors

Passives count toward the total number of respondents. But they don't directly affect the score. NPS ranges from -100 to +100. A score of -100 means every customer is a Detractor. A score of +100 means every customer is a Promoter, which would be amazing, but virtually impossible to achieve.

Here's an example: Say 50% of respondents are Promoters. 30% are Passives. 20% are Detractors. Your NPS would be 30. That's because 50% minus 20% equals 30.
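
The same arithmetic can be run directly on raw 0-10 answers. This is a minimal Python sketch, not any survey platform's implementation; the function name `nps` and the distribution of individual ratings are hypothetical, picked so the percentages match the 50/30/20 example.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score from raw 0-10 answers: % Promoters - % Detractors."""
    if not scores:
        raise ValueError("NPS is undefined with zero responses")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("NPS answers must be on a 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)   # 9-10
    detractors = sum(1 for s in scores if s <= 6)  # 0-6
    # Passives (7-8) are in neither group, but they stay in the denominator,
    # so they pull both percentages toward zero.
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical sample: 50 Promoters, 30 Passives, 20 Detractors out of 100.
sample = [10] * 25 + [9] * 25 + [8] * 15 + [7] * 15 + [5] * 20
print(nps(sample))  # 30.0
```

An all-Passive sample scores 0, which is why Passives still matter even though they are never subtracted.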

NPS Benchmarks

- Good: 0-30

- Excellent: 50+

- Industry average: ~10-20 (general)

Pros and Cons of NPS

Next, we need to consider the pros and cons of NPS.

Pros

- NPS provides strategic insight. It measures loyalty and relationship quality. It doesn't just look at satisfaction with individual transactions. Like CSAT, the single-question format is easy to implement. It doesn't burden respondents.

- The consistent methodology enables comparisons across departments and business units. You can even compare across industries. The classification into Promoters, Passives, and Detractors helps you prioritize improvement efforts, so you know where to focus.

Cons

- NPS lacks diagnostic detail. It tells you whether customers would recommend you. But it doesn't tell you why they feel that way. The willingness to recommend varies significantly across cultures. This makes global comparisons challenging.

- Without follow-up questions, NPS doesn't give clear direction on what to fix, which limits its actionability. Scores are also sensitive to timing: NPS measured right after a service failure can look very different from NPS measured after a positive customer experience.

Common NPS Mistakes and How to Avoid Them

Using NPS Too Frequently

NPS is meant for periodic relationship assessment, not after every transaction. Overuse leads to fatigue and noisy data.

Solution: Limit NPS to quarterly or semi-annual surveys to gauge sentiment trends without overwhelming customers.

Neglecting Follow-Up Questions

The "likelihood to recommend" score alone doesn't explain why customers feel that way.

Solution: Always include a brief follow-up asking what influenced the rating. These insights make NPS actionable.

Comparing Scores Across Unrelated Groups

Different industries and cultures interpret the 0-10 scale differently. Global comparisons can mislead leadership.

Solution: Benchmark NPS within your own sector or track internal movement over time rather than chasing external averages.

Now, let's look at CES.

What is CES?

Customer Effort Score (CES) measures the effort customers must spend to achieve their goals, such as resolving a technical issue or accessing a service. It grew out of research with an interesting finding: reducing customer effort is a better predictor of loyalty than maximizing satisfaction. For support leaders focused on efficiency and user experience, CES offers particularly relevant insights.

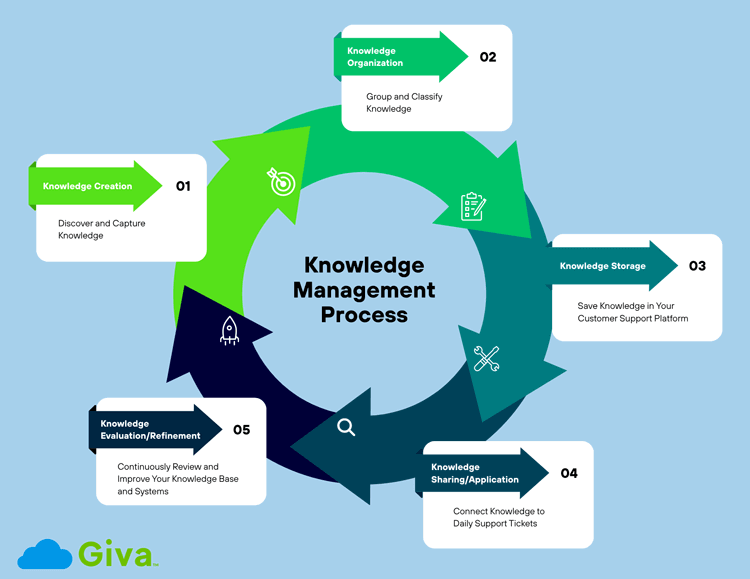

Purpose of CES

The philosophy behind CES is simple: customers value ease and efficiency. Organizations that make things effortless earn loyalty.

How CES is Calculated

CES typically asks: "How much effort did you personally have to put forth to handle your request?" Respondents usually answer on a 1-7 scale, where 1 means "very low effort" and 7 means "very high effort."

Some versions use a 1-5 scale. Some invert the scale so higher numbers represent lower effort.

CES Formula

Sum of all effort ratings / number of responses

A lower average indicates less effort, which is the desired outcome. Some organizations calculate CES differently, as the percentage of customers who report low effort (ratings of 1 or 2 on a 7-point scale).
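
Both CES variants, plus the scale inversion some surveys need, take only a few lines to compute. This is a minimal Python sketch, assuming a 1-7 scale where 1 means very low effort; the function names and the ten sample ratings are hypothetical.

```python
def ces_average(ratings: list[int]) -> float:
    """Sum of all effort ratings / number of responses (lower is better)."""
    if not ratings:
        raise ValueError("CES is undefined with zero responses")
    return sum(ratings) / len(ratings)


def ces_low_effort_pct(ratings: list[int], low_effort_max: int = 2) -> float:
    """Alternative CES: percentage of responses rated 1 or 2 (low effort)."""
    if not ratings:
        raise ValueError("CES is undefined with zero responses")
    low = sum(1 for r in ratings if r <= low_effort_max)
    return 100 * low / len(ratings)


def invert_scale(rating: int, scale_max: int = 7) -> int:
    """Map a survey where higher = easier onto one where 1 = lowest effort."""
    return scale_max + 1 - rating


# Hypothetical responses on a 1-7 scale (1 = very low effort).
ratings = [1, 2, 2, 3, 1, 5, 2, 1, 4, 2]
print(ces_average(ratings))         # 2.3
print(ces_low_effort_pct(ratings))  # 70.0
```

Normalizing inverted surveys with `invert_scale` before averaging keeps results from different survey versions comparable.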

CES Benchmarks

- Good: 1.5-2.5 (on 7-pt scale)

- Excellent: <2

- Industry average: fewer published benchmarks exist; lower is better

Pros and Cons of CES

Next, we need to consider the pros and cons of CES.

Pros

- CES is predictive of loyalty, and it points directly to friction in processes and systems. This gives you actionable insights.

- CES is particularly relevant to IT and software businesses (SaaS). Effort reduction aligns naturally with IT's goals of efficiency and creating a positive user experience (UX). Low-effort experiences are more likely to drive repeat usage and adoption. CES helps predict this behavior and can actively be used to design or modify services. CX teams can use it the same way.

Cons

- "Effort" means different things to different people, depending on their technical skills and expectations. This subjective interpretation is a challenge. CES doesn't capture whether customers feel valued or measure emotional connection to your services.

- While reducing effort is essential, it doesn't tell the whole story of customer experience. CES is also newer than CSAT and NPS, so it has less historical data behind it and fewer industry benchmarks to compare against.

Common CES Mistakes and How to Avoid Them

Misinterpreting What "Effort" Means

Customers vary in skill level and expectations, so their sense of "effort" isn't uniform.

Solution: Combine CES results with qualitative comments to uncover what effort truly means in your context.

Measuring Effort at the Wrong Touchpoints

Using CES in low-effort scenarios produces flat, meaningless data.

Solution: Apply CES where friction is most likely, such as self-service portals, provisioning requests, or support hand-offs.

Focusing Only on Low Effort, Not Experience Quality

Reducing effort is important, but efficiency alone doesn't guarantee satisfaction or loyalty.

Solution: Balance CES improvements with CSAT and NPS insights to ensure both ease and emotional connection are addressed.

Key Differences: A Comparative Analysis of CSAT vs. NPS vs. CES

Understanding CSAT vs NPS vs CES means looking at how these metrics differ across several important areas.

| Key Aspect | CSAT | NPS | CES |
|---|---|---|---|
| Primary Focus | Transaction satisfaction | Relationship loyalty | Ease of experience |
| Time Orientation | Immediate/short-term | Long-term | Immediate/short-term |
| Question Type | Direct satisfaction rating | Likelihood to recommend | Effort assessment |
| Scale | 1-5 or 1-10 | 0-10 | 1-5 or 1-7 |
| Calculation | Percentage satisfied | % Promoters - % Detractors | Average score or % low effort |
| Best For | Evaluating specific interactions | Measuring overall relationship | Identifying process friction |
| Actionability | High for tactical improvements | Moderate (requires follow-up) | High for operational improvements |
| Predictive Power | Low for loyalty | Moderate to high | High for repeat behavior |

Now, let's compare and contrast them in business scenarios:

Survey Format and Timing

- CSAT surveys work best right after specific interactions. Think ticket closures, system upgrades, or training sessions. They capture fresh impressions. But you should use them carefully to avoid survey fatigue.

- NPS surveys are typically sent less frequently, perhaps quarterly or twice a year. This assesses the evolving relationship over time.

- CES surveys are transactional. They should appear when customers complete processes that require effort.
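
One way to enforce this timing is to tie CSAT and CES to specific events while running NPS on its own calendar. The sketch below is an illustration of that cadence only, not a feature of any particular help desk product; the event names and the roughly quarterly 90-day NPS interval are hypothetical assumptions.

```python
from datetime import date, timedelta
from typing import Optional

# Hypothetical event names mapped to the transactional survey each should trigger.
TRANSACTIONAL_SURVEYS = {
    "ticket_closed": "CSAT",
    "system_upgrade": "CSAT",
    "training_session": "CSAT",
    "self_service_request_completed": "CES",
    "provisioning_completed": "CES",
}

# Assumption: NPS runs roughly quarterly, independent of individual transactions.
NPS_INTERVAL = timedelta(days=90)


def transactional_survey(event: str) -> Optional[str]:
    """Return "CSAT" or "CES" to send right after the event, or None."""
    return TRANSACTIONAL_SURVEYS.get(event)


def nps_due(last_sent: Optional[date], today: date) -> bool:
    """True if this customer has never had an NPS survey or the interval has passed."""
    return last_sent is None or today - last_sent >= NPS_INTERVAL


print(transactional_survey("ticket_closed"))         # CSAT
print(nps_due(date(2024, 1, 15), date(2024, 3, 1)))  # False (46 days later)
```

Keeping NPS out of the event mapping means no single transaction can trigger it, which helps avoid the survey fatigue described above.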

Use Case Scenarios

For CX teams, call centers, and IT service desks:

- CSAT excels at measuring satisfaction with individual ticket resolutions (or customer calls, emails, or messages).

- NPS better evaluates whether business units view IT as a strategic partner. For example:

  - Would they "recommend" IT to other departments?

  - Would they support IT in budget discussions?

- CES is ideal for assessing self-service portals, provisioning workflows, or any process where reducing friction helps improve business outcomes.

Correlation of CSAT, NPS and CES with Business Outcomes

- All three metrics correlate with business outcomes, though not equally. CES often shows the strongest connection to repeat usage and loyalty in operational contexts, including customer service and IT. This is critical for any organization trying to drive adoption of new systems or services.

- NPS may correlate better with strategic decisions. For example, think about budget allocation or willingness to participate in innovative initiatives.

- On the other hand, CSAT serves as an early warning system for acute problems. But it doesn't always predict long-term trends.

How to Choose the Best Metric for Your Team

Choosing among CSAT, NPS, and CES, or deciding how to use them together, depends on several factors. Above all, your operational context and objectives matter.

Define Your Most Important Objective

Start by clarifying what you're trying to achieve:

- If your goal is operational excellence and process improvement, CES should be your priority.

- If you're trying to transform IT's reputation from a cost center to a strategic partner, NPS provides insight into relationship quality.

- If you need to maintain service quality across hundreds of daily interactions, CSAT offers the tactical feedback you need.

Consider Your Audience

Internal IT organizations face different dynamics than customer-facing technology companies. Asking employees to rate their likelihood to recommend IT to a colleague may feel strange.

After all, IT is their only in-house or outsourced option. In these cases, you might reframe NPS as a measure of willingness to advocate for IT in strategic discussions. Or you might prioritize CES and CSAT instead, because they may be more meaningful.

External-facing technology services may find NPS more naturally aligned with their situation, especially in markets where customer choice and word of mouth drive adoption. Competitive dynamics and growth objectives make NPS a good fit.

Evaluate Your Maturity and Resources

Organizations new to experience measurement should start simple. CSAT provides immediate, actionable feedback. You don't need sophisticated analysis of CSAT results. As your measurement capabilities mature, you can add CES. This helps identify process improvements. Eventually, you can add NPS for strategic insight.

Consider your ability to act on the data you collect. There's no point in measuring effort scores if you can't redesign workflows. You need the resources and authority to make changes. Similarly, NPS loses value if leadership doesn't factor customer loyalty into strategic planning.

Assess Measurement Frequency Needs

If you need continuous feedback on high-volume interactions, CSAT and CES can be ideal because they're transactional. NPS is relationship focused. It shouldn't be used too frequently.

Quarterly or semi-annually is typically sufficient. Remember that over-surveying with NPS can dilute its meaning, and it reduces response rates, too.

Account for Cultural and Geographic Factors

Global CX and IT organizations must recognize that cultural norms significantly influence scoring behavior. Nordic countries tend to score conservatively. Some cultures rate more generously. If you're comparing scores across regions, you may need regional benchmarks. Don't rely on a single global standard.

CES may suffer less from cultural variation than CSAT or NPS, because effort tends to be understood more consistently from one country to the next.

Is There a "Best" Overall CX Metric?

The short answer is no. There's no single best metric for all situations, because each one illuminates a different aspect of customer experience. The "best" choice depends on your specific context, objectives, budget, team, and constraints.

That said, operational feedback increasingly suggests that CES deserves more attention than it typically receives, particularly in IT environments. Technology users often value efficiency and ease over being wowed by complex features.

A system that gets out of their way is powerful. It allows them to accomplish tasks with minimum friction. This frequently outperforms a system with impressive features but clunky workflows.

For IT leaders focused on driving adoption and reducing support burden, CES provides particularly actionable insights.

However, a mature measurement approach typically combines metrics. You don't just choose one:

- Consider deploying CSAT for transactional feedback on service desk interactions.

- Use CES for major processes, such as provisioning or access requests.

- Use NPS for quarterly pulse checks on the overall IT-business relationship.

This multi-metric approach gives you both perspectives. You get the tactical detail needed for continuous improvement. And you get the strategic perspective required for long-term planning.

The key is ensuring each metric serves a clear purpose. Each one should drive specific actions. Collecting data for data's sake creates survey fatigue and wastes resources.

Every metric you deploy should:

- Have a defined owner

- Have a regular review schedule

- Clearly connect to improvement initiatives

Conclusion: Which is the Best Score? CSAT vs. NPS vs. CES

Understanding the differences between CSAT vs NPS vs CES empowers CX and IT leaders to build better measurement systems. You generate genuine insight rather than just numbers.

CSAT excels at capturing satisfaction with specific interactions. This makes it ideal for operational monitoring and quality assurance.

NPS provides a strategic perspective on relationship health and loyalty. But its application requires thoughtful adaptation in some IT contexts.

CES identifies friction in processes and systems. It directly supports efficiency and adoption objectives.

The most effective approach typically involves strategically using multiple metrics. Each one aligns with specific objectives and touchpoints in your customer journey. Start with one metric aligned to your most pressing need. Make sure you can act on the insights it provides. Then expand your measurement portfolio as your capabilities mature.

Remember that metrics are means to an end. The goal isn't achieving a perfect score on any single metric. It's using customer feedback to drive continuous improvement, build stronger relationships, and demonstrate your team's value to the organization.

When you choose the right metrics and act on the insights they provide, you transform customer experience measurement from a reporting exercise into a strategic capability that drives real business impact.

For support leaders ready to elevate their customer experience measurement, the question isn't CSAT vs NPS vs CES. It's about leveraging the unique strengths of each customer-centric metric, building a comprehensive understanding of your customers' needs, and creating services that truly deliver value.

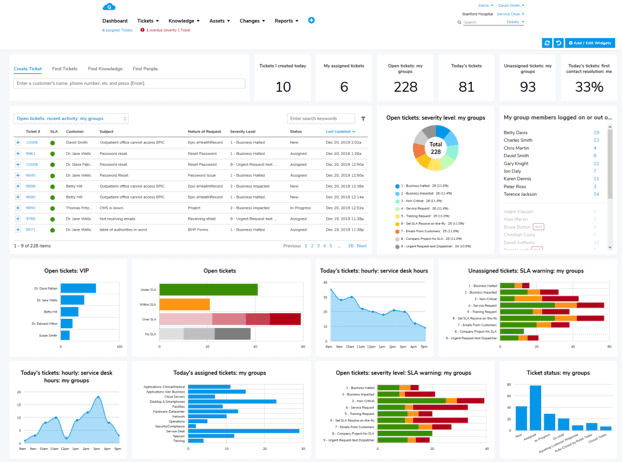

Giva Can Help Streamline Your Support Services Increasing Satisfaction

Giva's help desk and customer service products can help you stay focused on creating happy customers instead of navigating complex software:

- Improve First-Contact Resolution and Response Times: Instant visibility into all open issues enables fast resolution, resulting in higher first-contact resolution rates and shorter response times

- Increase Customer Satisfaction with Proactive Issue Monitoring: Threshold monitoring quickly identifies emerging trends, providing actionable insights before customer satisfaction is impacted

- Optimize Agent Performance: With real-time data on agent performance, supervisors can dynamically reassign tasks, adjust staffing, and optimize workflows, ensuring that agents are always focused on priority cases

Get a demo to see Giva's solutions in action or start your own free, 30-day trial today!